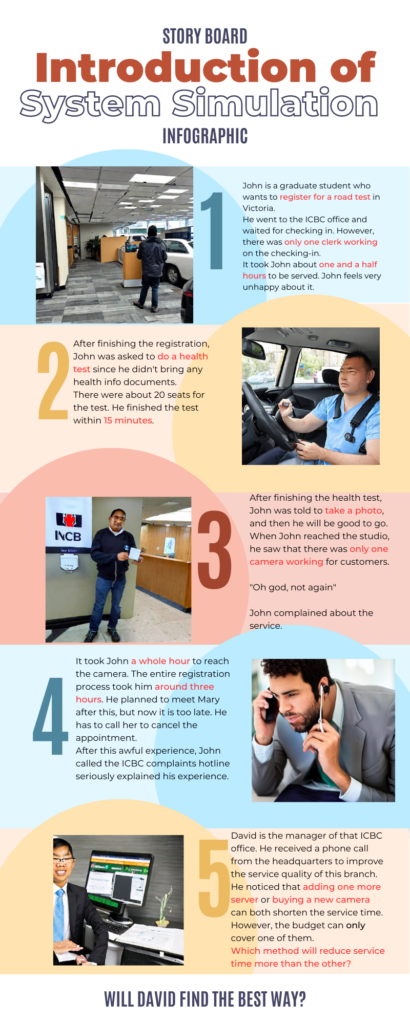

Storytelling is one of the most powerful tools educators can use to illustrate ideas and concepts. When I was learning system analysis at UVic, most of the content was hard to understand. For example: "a complex queueing system linked in parallel, and all of them connect to a single server with another queueing system," or "the system is stable with arrival rate λ." I had a very hard time understanding and remembering what these words meant.

With the storytelling method, however, the same idea becomes a story like this: "Jerry is approaching YVR airport, and his flight is going to take off soon. There are many check-in counters, and Jerry just randomly chooses the one beside the red flower. All of the counters connect to the security check section, so Jerry doesn't need to worry about which one he picks. Passengers from all check-in counters must wait in a single line for the security check." I think even students with no background in system analysis can understand the concept easily through this story. The storyboard in image #1 shows a similar scenario.
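The airport story also maps back onto the formal statement "the system is stable with arrival rate λ." The Python sketch below is a minimal illustration under stated assumptions, not course material: it assumes Poisson arrivals, exponential service times, and that Jerry's random choice splits the arrival stream evenly across the counters. The class name `AirportModel` and every rate in it are hypothetical. Under those assumptions, each stage behaves like a single-server queue, and the whole system stays stable only if every stage's utilization ρ = λ/μ is below 1. The key point of the story is that the single security line receives the full arrival rate λ, so it can become the bottleneck even when every counter is comfortable.

```python
from dataclasses import dataclass


@dataclass
class AirportModel:
    """Hypothetical parameters for the YVR story; all rates are per minute."""
    arrival_rate: float   # lambda: passengers arriving at the airport
    num_counters: int     # parallel check-in counters
    counter_rate: float   # service rate (mu) of one check-in counter
    security_rate: float  # service rate (mu) of the single security server

    def utilizations(self) -> dict[str, float]:
        # Random counter choice splits the stream evenly: lambda / k per counter.
        per_counter = self.arrival_rate / self.num_counters
        # Every passenger ends up in the one security line, so it sees the full lambda.
        return {
            "counter": per_counter / self.counter_rate,
            "security": self.arrival_rate / self.security_rate,
        }

    def is_stable(self) -> bool:
        # A queue stays bounded only if work arrives slower than it is served
        # (rho < 1), and that must hold at every stage of the journey.
        return all(rho < 1 for rho in self.utilizations().values())


model = AirportModel(arrival_rate=4.0, num_counters=5, counter_rate=1.0, security_rate=5.0)
print(model.utilizations())  # {'counter': 0.8, 'security': 0.8}
print(model.is_stable())     # True
```

With the same counters but a slower security server (for example `security_rate=3.0`), each counter is still fine at ρ = 0.8, yet security reaches ρ ≈ 1.33 and the line grows without bound, which is exactly the situation Jerry would feel at YVR.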

The storyboard design is the preliminary work for the story video. A good story should not be too long, and it should have clear points of emphasis. I used red to highlight the important sections so that, later in the video-making stage, I can put more effort into those parts. This follows the video-making guidelines from the week 9 content and revisits the design principles from week 5. Thinking more deeply about the storytelling strategy, I noticed that the core purpose of stories in education is to lower the barrier to understanding and to strengthen students' memory. I think it is a good example of Merrill's First Principles of Instruction: "Learners are engaged in solving real-world problems" and "Existing knowledge is activated as a foundation for new knowledge."

While exploring AI tools, I found many interesting things. First, as shown in the image #1 storyboard, the pictures in each section were generated with the AI site Stable Diffusion. As a computer science student, I had previously used only ChatGPT, and only for debugging code. I ran some small tests to see what results Stable Diffusion could give me. For example, when I gave it the prompt "A driver is waiting in the ICBC office but has to wait for a very long line. only one clerk is available for them.", I got this result.

It was funny to see a bunch of vehicles waiting in line inside an office. The only place I see lines of vehicles is a McDonald's drive-through, and that is definitely not an office. I think these tools basically pick out keywords and merge them together; they do not know the actual meaning of the language. It is like a math problem whose correct answer is 2: the AI tends to give me answers like 1.95 or 1.99. It is not trying to find the exact answer; it uses what it learned from its data to find the closest approximation. Well-trained tools like ChatGPT usually get close to 1.99, but I'm afraid Stable Diffusion only reaches about 1.80. This reflects what we discussed in week 8 about the limitations of large language models. In the future, I may use AI for repetitive work, but for creative work I will trust my own experience more than any AI tool.

References:

Pengfei Li. (2023, March 12). [Stable Diffusion session generating images from given prompts]. https://stablediffusionweb.com/#demo